XMM

Data

Always use the remeis archives at /eu/X-ray/XMM/data/ instead of downloading observation files yourself. To locate the data of an observation, run something like find /eu/X-ray/XMM/data/????/obsID/odf -type d, replacing obsID with your observation ID. If the observation you want to analyze is not in the archives, contact the XMM admin (Jonathan Knies) and the observation will be downloaded for you.
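The archive lookup can also be done from Python, which is handy inside analysis scripts. This is a minimal sketch: the function name find_odf_dirs and its archive_root parameter are hypothetical, and the only assumption about the archive is the layout implied by the find call above (a four-character directory, then the observation ID, then odf).

```python
import glob
import os


def find_odf_dirs(obsid, archive_root="/eu/X-ray/XMM/data"):
    """Return all odf directories for obsid below archive_root (sorted)."""
    # '????' matches exactly four characters, like the wildcard in the find call.
    pattern = os.path.join(archive_root, "????", obsid, "odf")
    return sorted(path for path in glob.glob(pattern) if os.path.isdir(path))


if __name__ == "__main__":
    matches = find_odf_dirs("0147190101")
    if matches:
        print("\n".join(matches))
    else:
        print("Observation not in the archive; contact the XMM admin.")
```

An empty list means the observation has not been downloaded to the archive yet.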

XMM EPIC Data Extraction

After the system was updated from Ubuntu 11 to Ubuntu 12, a problem occurred with the function "dssetattr", which is called inside "epchain" and sets or adds an attribute to an HDU (Header/Data Unit). The solution is to call 'source $SOFTDIR/sas_init.csh' at the beginning of each script so that SAS is initialized inside the script.

A little example script:

#!/bin/csh

source $SOFTDIR/sas_init.csh # initialize the SAS software

set xmmscripts = ${XMMTOOLS} # make sure the proper xmmscripts are loaded

set obsid = '0147190101'

set datadir = /eu/X-ray/XMM/data/????/$obsid/odf

${xmmscripts}/xmmprepare --datadir=$datadir --prepdir=$obsid --pn # prepare the data

${xmmscripts}/xmmextract --prepdir=$obsid --pn --full # full extraction of the chip

${xmmscripts}/xmmextract --prepdir=$obsid --pn --srcreg=src.fits --bkgreg=bkg.fits # source and background extraction of the chip

Note that it is usually better to have the data preparation and the extraction in separate scripts (since one usually prepares once and then tries several extractions with different settings).

The xmmscripts need the region files to be FITS files. You can convert ds9 region files via

ds9tocxc outset=outfilename.fits < infilename.reg

For simple extractions of point sources, creating region files is not necessary: you can give the positions of the source and background regions, as well as their extraction radii, directly on the command line (e.g. with the "--ra", "--dec" and "--bkgra", "--bkgdec" arguments).

"xmmextract" is a very powerful script; use its "--help" argument to get a list of all arguments.

Further important points:

- If your observation includes sub-observations, choose one of them. Do this with, for example, "onlyexp=1", not "onlyexp=PNU001".

- Since our calibration database gets updated frequently, we ran into the problem that new calibration files only work with the latest SAS. As simply installing the new SAS is very problematic, there is a workaround: until the new SAS is installed (we must be patient as this is not trivial), you have to select the calibration files that work with our SAS version by passing an "--analysisdate" to xmmprepare. The following works perfectly:

Example:

#!/bin/csh

${XMMTOOLS}/xmmprepare --datadir=/eu/X-ray/XMM/data/2290/0679780201/odf/ --prepdir=./test --pn --analysisdate=2014-01-11T01:05:00.000

XMM OM Data Extraction

To extract the data of the Optical Monitor (OM) onboard XMM, there are three tools that do this automatically: omichain, omfchain and omgchain, where "i" stands for image mode, "f" for fast mode and "g" for grism mode. The mode of your observation can be found on the XMM log browse site: http://xmm2.esac.esa.int/external/xmm_obs_info/obs_view_frame.shtml. To extract your images, you first have to set some paths:

source $SOFTDIR/sas_init.csh

setenv SAS_ODF path_to_your_data # normally /eu/X-ray/XMM/data/rev/ObsID/odf/

cifbuild

setenv SAS_CCF ccf.cif

odfingest

setenv SAS_ODF `ls -1 *SUM.SAS`

After this you can run

omichain # or omfchain, omgchain

You can also do the extraction step-by-step. For a description see http://xmm.esac.esa.int/sas/current/documentation/threads/omi_stepbystep.shtml.

At the end you should have several images (*IMAGE*), region files (*REGION*) and source lists (*SWSRLI*) for the OM exposures and filters. The naming convention for the files is:

- IOOOOOOOOOODDUEEETTTTTTSXXX.FFF or POOOOOOOOOODDUEEETTTTTTSXXX.FFF (for PPS product files)

- OOOOOOOOOO = XMM-ObsID (10 characters)

- DD = data source identifier (here OM)

- U = exposure flag (S = sched, U = unsched, X = not applicable)

- EEE = exposure number within the instrument observation (3 characters)

- TTTTTT = product type (6 characters)

- S = 0 or data subset number/character

- XXX = source number or slew step number (3 characters)

- FFF = file format (3 characters)
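The naming scheme above can be split mechanically by character position. The following is a minimal sketch; the names OMProduct and parse_product_name are hypothetical, and the field widths are taken directly from the list above (1 + 10 + 2 + 1 + 3 + 6 + 1 + 3 characters, then the extension).

```python
from typing import NamedTuple


class OMProduct(NamedTuple):
    prefix: str        # I or P (P for PPS product files)
    obsid: str         # OOOOOOOOOO
    source: str        # DD
    exp_flag: str      # U
    exposure: str      # EEE
    product_type: str  # TTTTTT
    subset: str        # S
    number: str        # XXX
    file_format: str   # FFF


def parse_product_name(filename):
    """Split a product filename into the fields of the naming scheme."""
    stem, dot, file_format = filename.rpartition(".")
    if not dot or len(stem) != 27 or len(file_format) != 3:
        raise ValueError("unexpected product filename: %r" % filename)
    return OMProduct(
        stem[0], stem[1:11], stem[11:13], stem[13], stem[14:17],
        stem[17:23], stem[23], stem[24:27], file_format,
    )


if __name__ == "__main__":
    print(parse_product_name("P0090050801OMS006IMAGE_0000.FIT"))
```

For the detector image P0090050801OMS006IMAGE_0000.FIT this yields the observation ID 0090050801, a scheduled (S) exposure number 006 and the product type IMAGE_.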

From all exposures taken with one filter, a mosaiced sky image is produced; its filename matches *SIMAG?.FIT. The ? stands for the filter: L = UVW1, M = UVM2, S = UVW2, B = B, U = U, V = V. Open the image with ds9 and check whether your source is visible and where it is. You can check this by loading a region file, produced e.g. by simbad2ds9, into the image.

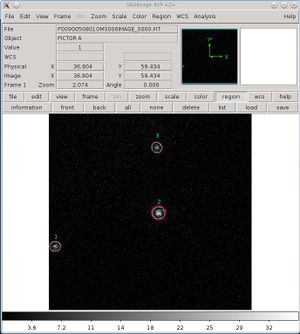
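When several mosaics are present, the filter letter can be read off the filename automatically. This is a small sketch under the assumption that the letter directly follows the string SIMAG, as in the pattern given above; the function name mosaic_filter and the filename used in the example are hypothetical.

```python
import re

# Filter letters as listed in the text above.
OM_FILTERS = {"L": "UVW1", "M": "UVM2", "S": "UVW2", "B": "B", "U": "U", "V": "V"}


def mosaic_filter(filename):
    """Return the OM filter name encoded in a mosaic filename, or None."""
    match = re.search(r"SIMAG([A-Z])", filename)
    if match is None:
        return None
    # Unknown letters also give None instead of raising.
    return OM_FILTERS.get(match.group(1))


if __name__ == "__main__":
    # Hypothetical filename, used only to illustrate the lookup.
    print(mosaic_filter("P0090050801OMX000RSIMAGL000.FIT"))
```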

Then check the detector images (P*IMAGE_*000.FIT) for your source (some detector images may not contain your source because the window was off). Load the region file which belongs to the detector image (*REGION*000.ASC, e.g. P0090050801OMS006IMAGE_0000.FIT -> I0090050801OMS006REGION0000.ASC) and identify the number of your source.

Look into the source list for your exposure (P*SWSRLI*.FIT) and note the SRC_ID in the last column. Open the combined source list (*COMBO*) and search for your SRC_ID. Here you can find all the magnitudes and fluxes of your source for the different filters.

Choosing the correct XMM-EPIC filter

Choosing the correct EPIC filter for your observation depends on the visual magnitudes of the other objects (usually stars) in the EPIC field of view.

The XMM user's handbook (sect. 3.3.6; http://xmm.esac.esa.int/external/xmm_user_support/documentation/uhb/epicfilters.html) recommends:

Thick filter: suppresses optical contamination from point sources up to magnitudes of -2 to -1 (pn), or +1 to +4 (MOS)

Medium filter: if there is nothing brighter than magnitude ~ 6 to 9

Thin filter: for point sources that are 12 mag fainter than the corresponding thick filter limits

The UHB notes that these magnitudes apply when you use the full frame mode; if you use a partial window mode, then sources up to 2-3 magnitudes brighter than these values should be okay.
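The limits above can be turned into a quick check for the brightest star in the field. The sketch below is one conservative reading, not an official rule: it takes the faint end of each quoted range, derives the thin limit as the thick limit plus 12 mag, and lets you relax all limits by a window_margin (2 to 3 mag for partial window modes). The function name thinnest_filter and the LIMITS table are hypothetical helpers.

```python
# Faint end of each quoted magnitude range (most conservative reading).
LIMITS = {
    "pn": {"thick": -1.0, "medium": 9.0},
    "mos": {"thick": 4.0, "medium": 9.0},
}


def thinnest_filter(brightest_mag, camera, window_margin=0.0):
    """Return the thinnest filter still allowed for the brightest star.

    brightest_mag: magnitude of the brightest object in the field of view.
    window_margin: magnitudes by which the limits are relaxed (assumption:
                   0 for full frame, 2 to 3 for partial window modes).
    Returns None if the star is too bright even for the thick filter.
    """
    thick = LIMITS[camera]["thick"] - window_margin
    medium = LIMITS[camera]["medium"] - window_margin
    thin = LIMITS[camera]["thick"] + 12.0 - window_margin
    # Smaller magnitude means brighter, so "fainter than" is ">=" here.
    if brightest_mag >= thin:
        return "thin"
    if brightest_mag >= medium:
        return "medium"
    if brightest_mag >= thick:
        return "thick"
    return None


if __name__ == "__main__":
    for camera in ("pn", "mos"):
        print(camera, thinnest_filter(10.0, camera))
```

With a brightest star of magnitude 10.0 in full frame mode, this reading gives the medium filter for both pn and MOS.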

Use the United States Naval Observatory catalog at http://www.nofs.navy.mil/data/fchpix/ to find the stars within a 9' radius.

At that website, enter your R.A. and Decl., and for the output file options in the left column, deselect everything except RA, DEC, magnitude, and offset from center. Then click 'retrieve data' in the right column.

Next window: Click on "USNO B1.0 Star List" to download a gzipped ASCII file with typically hundreds of rows/stars for lines of sight away from the Galactic Plane (or many thousands of rows/stars for lines of sight in the Galactic Plane).

Example file: See /home/markowitz/proposals/xmm2014/GS1826USNO for a Galactic XRB, GS1826, located in the Galactic Bulge (optically-crowded fields).

The star list is ordered by R.A., but you can re-order the ASCII list by magnitude, e.g.,

cat GS1826USNO | grep -v '#' | awk '{print $7 " " $12}' | sort -g > starlist_sortB

cat GS1826USNO | grep -v '#' | awk '{print $8 " " $12}' | sort -g > starlist_sortR

since the B and R magnitudes are columns 7 and 8, and the distance from the center of the field of view (in arcsec) is column 12.

Ignore the rows with zero magnitudes; a zero means unmeasured flux.
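The sorting and the removal of zero magnitudes can be combined in one step. The following is a sketch in Python, assuming the whitespace-separated column layout described above (magnitude in column 7 or 8, offset in arcsec in column 12); the function name brightest_stars is hypothetical. It also converts the offset to arcmin, which is easier to compare with the size of the EPIC field of view.

```python
def brightest_stars(lines, mag_col=7, offset_col=12):
    """Return (magnitude, offset in arcmin) pairs, brightest first.

    Columns are counted from 1, as in awk. Lines containing '#'
    (like grep -v '#'), malformed rows and rows with a zero magnitude
    (unmeasured flux) are skipped.
    """
    rows = []
    for line in lines:
        if "#" in line or not line.strip():
            continue
        fields = line.split()
        try:
            mag = float(fields[mag_col - 1])
            offset_arcsec = float(fields[offset_col - 1])
        except (IndexError, ValueError):
            continue
        if mag == 0.0:
            continue
        rows.append((mag, offset_arcsec / 60.0))
    return sorted(rows)


if __name__ == "__main__":
    import sys

    # Usage: python script.py GS1826USNO
    with open(sys.argv[1]) as handle:
        for mag, offset in brightest_stars(handle)[:20]:
            print("%6.2f  %6.2f'" % (mag, offset))
```

Pass mag_col=8 to sort by the R magnitude instead of B.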

For these example files (e.g. /home/markowitz/proposals/xmm2014/starlist_sortB), the brightest B- and R-band magnitudes are 10.0-10.3, including some objects that are only 3-5' from the center and at least one that is only 1.2' from the center. One therefore cannot use the THIN EPIC filter; one must use the MEDIUM filter for all three EPIC cameras.

XMM ESAS

If you are interested in diffuse X-ray emission it is recommended to use the XMM-Newton Extended Source Analysis Software. The manual can be found at [1] with very detailed step-by-step instructions.

Working with XMM ESAS in the remeis environment

Before you start with the data analysis you have to source the SAS init via source $SOFTDIR/sas_init.csh. Since you will need this every time you start a new terminal it is recommended to add an alias to your .cshrc in your home directory, e.g. alias setsas 'source $SOFTDIR/sas_init.csh'.

First you have to find the observation data path in the archives, which can be done by typing find /eu/X-ray/XMM/data/????/obsID/odf -type d, where you replace obsID with your desired observation ID. Next, you have to set up the environment variables for the SAS tasks to function properly.

Create an analysis directory somewhere in your userdata (usually one analyzed observation has > 1 GB so don't use your home). Set the following environment variables:

setenv SAS_ODF DIR - where DIR is the observation data path from above.

setenv SAS_CCF ANALYSIS_DIR/ccf.cif - where ANALYSIS_DIR is your directory for the analysis (on userdata), you have to add '/ccf.cif' to this path. This will create the ccf.cif file in your directory when running cifbuild

setenv CALDB_ESAS /userdata/data/knies/XMM/CALDB_ESAS (*)

* You need additional calibration files for ESAS. The latest version can be found at /userdata/data/knies/XMM/CALDB_ESAS. Please use this directory instead of downloading the files again, to save disk space.

Next you have to run the basic tasks to prepare the data, but with some different options since we are working in the remeis environment:

cifbuild withccfpath=no analysisdate=now category=XMMCCF calindexset=$SAS_CCF fullpath=yes

odfingest outdir=ANALYSIS_DIR

Important: you can't move your files after running these steps or you will get errors.

After odfingest is finished there should be a file ending with "SUM.SAS" in your directory. Note the filename and re-set the SAS_ODF variable:

setenv SAS_ODF ANALYSIS_DIR/*SUM.SAS - replace *SUM.SAS with the filename in your directory

After this step you can follow the ESAS manual normally, starting with [2]. Note that you have to run cifbuild and odfingest only once (and again whenever SAS has been updated), but you have to set the environment variables each time you open a new terminal. To speed this up, you can write a small shell script to run each time before you continue your analysis, e.g.:

#!/bin/tcsh -f

source $SOFTDIR/sas_init.csh

setenv SAS_ODF ANALYSIS_DIR/*SUM.SAS

setenv SAS_CCF ANALYSIS_DIR/ccf.cif

setenv CALDB_ESAS /userdata/data/knies/XMM/CALDB_ESAS

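Replace the directories etc. as shown above. Because the script sets environment variables, load it into your current shell with source scriptfile; executing it with ./scriptfile would set the variables only in a subshell that exits immediately.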